Up next

"Western Values" Explained

LEAVE THE WORLD BEHIND EXPLAINED: ARE YOU READY FOR WHAT'S COMING?

Annunaki Timeline Explained | Ancient Astronaut Archive

General Relativity Explained simply & visually

The BONE SNATCHING Colony Explained | The Bone Snatcher

Joe Rogan Thinks Jesus is Fake LMAO | International Criminal Court Gayness Explained

The World's First Action Hero - Gilgamesh Explained

Vertices and Edges Explained | Graph Theory Basics

The Traveling Salesman Problem Explained in under 5 mins | Graph Theory Basics

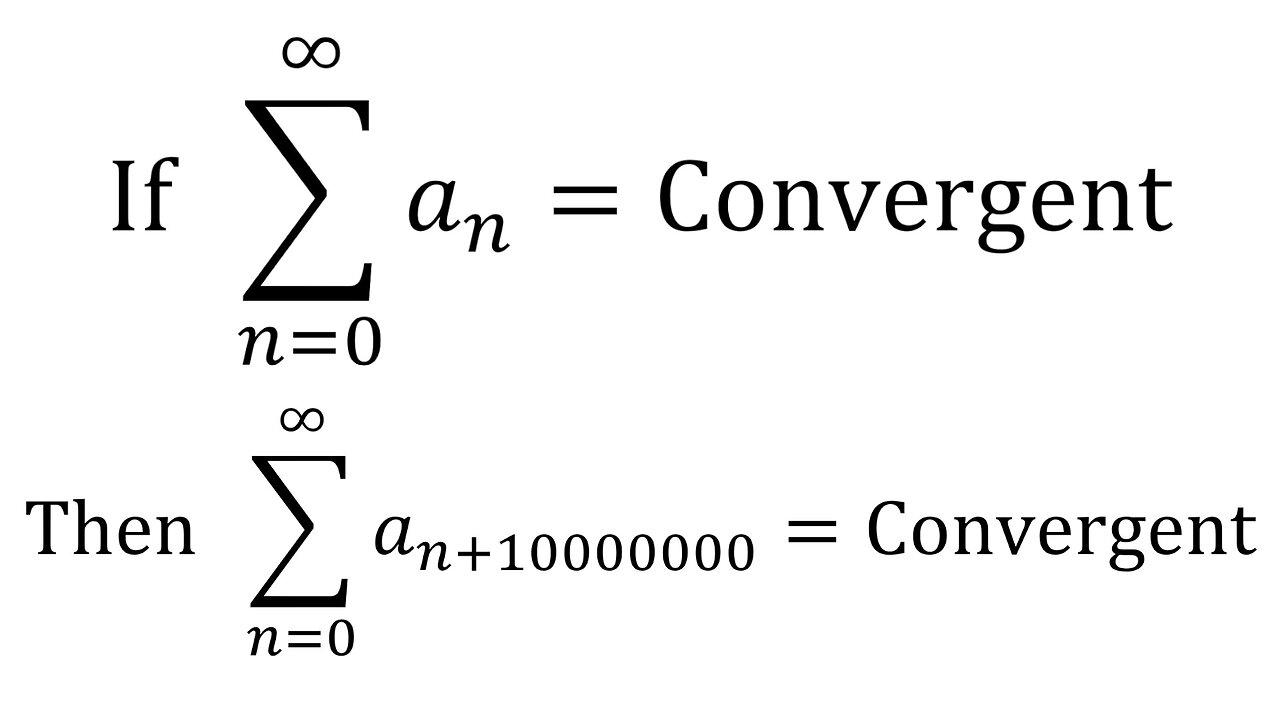

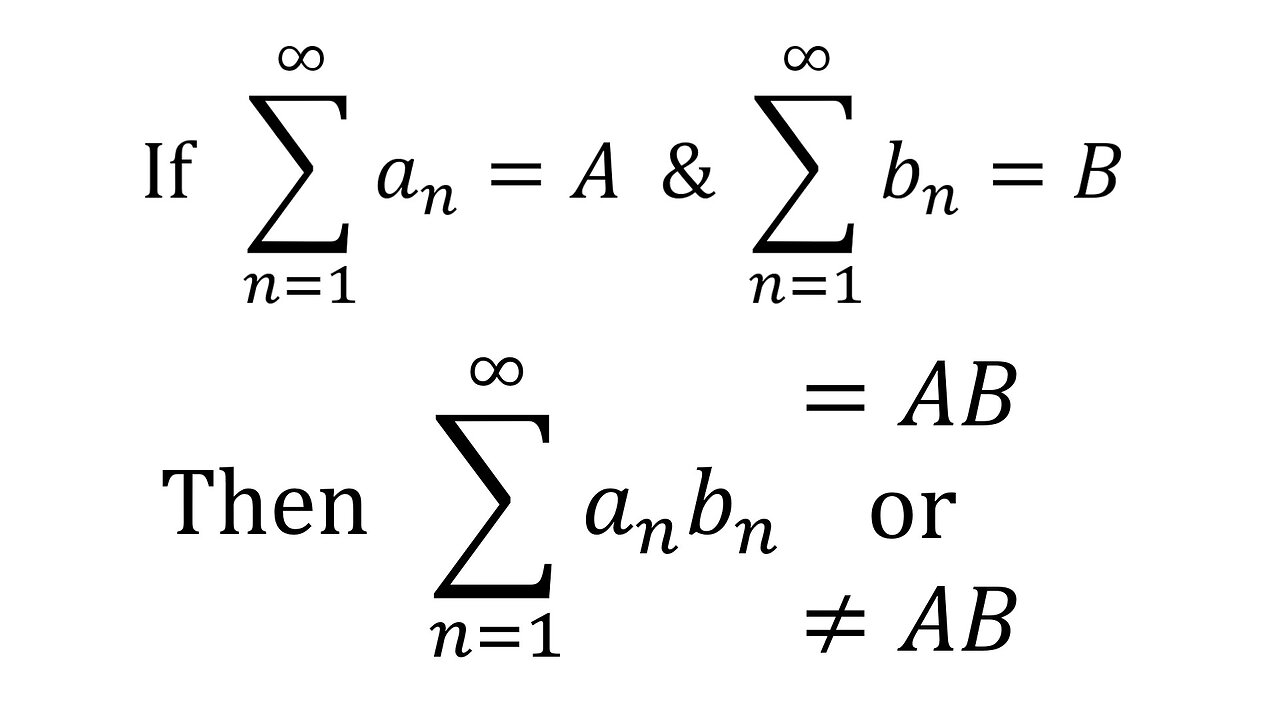

Hamiltonian Circuits and Paths Explained | Graph Theory Basics

Euler's Path and Circuit Theorems Explained | Graph Theory Basics

Spiritual Healing Explained by Hina Bhatia

Detroit's Forbidden Housing Projects Explained

Indian Army Build 70-Foot Bailey Bridge in 72 Hours in Sikkim | Explained by World Affairs

US Criticises India in Religious Freedom Report | S Jaishankar Responds | Explained by World Affairs

Saudi Arabia's $2 Trillion Disaster: Neom Line City | Explained by World Affairs

1. Worbs: when political terms have no meaning | What is Politics?

Putin's Weird Walk EXPLAINED 🤔

U.S. Space Force Mission and Focus Explained by Guardians

China vs. The World: China's Shocking Border Disputes Explained Through Maps | World Affairs

Apple Intelligence EXPLAINED (in 5 minutes)

The impeachment case against Joe Biden, explained | About That

How the Big Bang, Broken Symmetries and Quantum Gravity are Explained in the CTMU

The CTMU explained in Plain English (Reading through the Abstract)

Man With 200 IQ Gives Theory on Reality (CTMU Explained)

Attention! Your Home Internet Might Get Slowed Down Soon! #home #internet #explained #shorts

Israel "Mowing the Lawn" explained | Omar Suleiman and Lex Fridman

Python Hash Sets Explained & Demonstrated - Computerphile

NOT A "NOTHING BURGER"! Colorado Trump Ruling EXPLAINED - Pure MENTAL GYMNASTICS! Viva Frei Vlawg

The Law EXPLAINED! Federal Court Rules Trudeau's Emergencies Act UNLAWFUL! Viva Frei Vlawg

How do spacecraft orbit Earth? Angular momentum explained by NASA

Kamala Harris Claims Upcoming Election Is "Existential In Terms Of Where We Go As A Country."

No, former President Trump could not serve two more terms if reelected

The mind of a criminal explained | Matthew Cox and Lex Fridman

Feds Tracking Bank Purchases Using Terms Like 'MAGA,' 'Trump,' and 'Bible'

Satellite Images That Cannot Be Explained

Alfie Phillips | Life terms for mother and partner for murder of toddler

Buddhist Denominations Explained | Theravada vs Mahayana

Ecuador emergency explained | Why Guayaquil is prized turf for drug traffickers

Israel's 'next phase' of war with Hamas explained but 'nightmare scenario' still real fear

EXPLAINED: Why NO Muslim country wants Gazan refugees

Machine Code Explained - Computerphile

2,000,000 people set to benefit from 2024 tax package | Explained

What Is 20/20 Vision? Visual Acuity Explained | Dr. Jeff Goldberg & Dr. Andrew Huberman

Season of Saturnalia Explained - ROBERT SEPEHR

GPT-3 - explained in layman's terms.

OpenAI researchers have released a paper describing the development of GPT-3, a state-of-the-art language model with 175 billion parameters. OpenAI’s previous model, GPT-2, had 1.5 billion parameters and was the largest model at the time of its release. It was soon eclipsed by NVIDIA’s Megatron, with 8 billion parameters, and then by Microsoft’s Turing NLG, with 17 billion parameters. Now OpenAI has turned the tables with a model roughly 10x larger than Turing NLG.

Current NLP systems still largely struggle to learn from a few examples. With GPT-3, the researchers show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art fine-tuning approaches.

Read more here:

- OpenAI’s GPT-3 Can Now Generate The Code For You - https://bit.ly/30GxSHM
- GPT-3 Is Amazing—And Overhyped - https://bit.ly/2E8XC7Z

#GPT3 #NLP #OPENAI #ARTIFICIALINTELLIGENCE
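To make "few-shot" concrete, here is a minimal Python sketch of how a few-shot prompt can be assembled: a task description, a handful of solved examples, and one unsolved query that the model is expected to complete. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the translation pairs are illustrative assumptions, not the exact format used by OpenAI. No model is called here; the sketch only builds the text.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Build a plain-text prompt: task description, solved examples, then the open query.

    The "Input:/Output:" layout is a hypothetical convention chosen for illustration.
    """
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final query has no answer; a language model would be asked to continue from here.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Two solved examples make this a "two-shot" prompt (illustrative data).
examples = [("cheese", "fromage"), ("sea otter", "loutre de mer")]
prompt = build_few_shot_prompt("Translate English to French.", examples, "peppermint")
print(prompt)
```

The point of the design is that the examples live in the prompt itself. The model's weights are not updated, which is what distinguishes few-shot use from fine-tuning.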
