Up next

WOW! CNN trying to SHUT DOWN Tucker Carlson’s Tour - Stay Free 388

Mike King Blows Open the 9-11 Conspiracy Pt. 1 (6.21.24 @ 8PM EST)

Will Biden's New Precedent Open Further Investigation of Benghazi?

Skull and Bones open beta review

Multiplayer Open Beta Trailer _ Call Of Dut

A Political Game of Thrones, an Open Door, Russian Warships and Battle Lines Being Drawn

HR Mart Property Tour | Cayce, SC

The #1 Source of Health Problems & Disease? | Paul Saladino

Democrats Problems Getting WORSE! haha!

From Craigslist to Philanthropist: A Wide Open Conversation with Craig Newmark and Robert Siegel

House Speaker Mike Johnson Goes OFF: "They Engineered The Open Border"

Pro-Open Borders Massachusetts Governor goes full NIMBY

Amazing Projects From Open Sauce 2024

How to Position Yourself for Your Open Doors - Pastor Paula White

Claude 3.5 - A Punch to Open AI Where it Hurts.

Mayor blames "SYSTEMIC RACISM" for Chicago's problems as he announces $500K to "STUDY REPARATIONS"

WATCH: Kim Jong-Un and Putin Travel in Open Car | Putin Visits North Korea | Times Now World

What Do You Notice About How CNN Tried To SHUT DOWN Tucker’s Tour? This Doesn’t Make Sense

Rachel Maddow HAS MELTDOWN Over Donald Trump's Potential REVENGE TOUR

Biden's Open Border is a Refuge For Criminals

The Internet Has DESTROYED Credentialism, College Degrees No Longer Open Any Doors

CNN Desperately Tries – AND FAILS – To Get Tucker’s Tour Canceled!

Cancel Culture Backlash of My UK Tour | Michael Franzese

Will Biden's New Precedent Open Further Investigation of Benghazi?

Ahead of Shutdown, Alex Jones Gives Historic Tour of Infowars Studios

Word Problems: Subtraction Without Regrouping | MathTinik

New Delhi races to open jammed flood gates

Phoenix heat record: 'like when you open an oven'

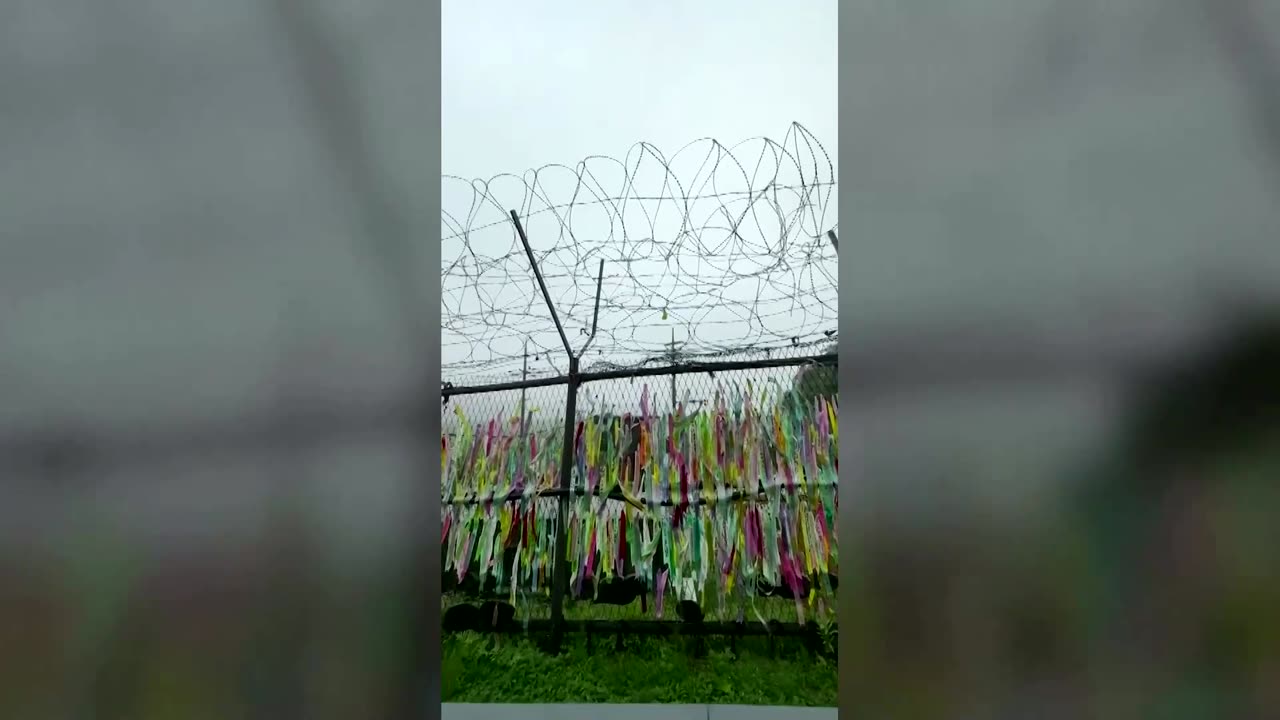

A tour of Korea's demilitarized zone

Vice President Harris Kicks Off Her Fight For Reproductive Freedoms Tour

Shaq Says Men Should Never Open Up To Women

Peter Doocy PRESSES Biden Official On Open Borders; Gets TERRIBLE Response

Vice President Harris Participates "Fight for Reproductive Freedoms" Tour Moderated Conversation

States Send Troops to Challenge Biden’s Open Border Orders Crossroads Live REC | Trailer

Houthis Reveal What They Want In Return For Hijacked Ship; Journalists Get Tour | Israel-Hamas War

We Who Wrestle With God Tour Announcement

Douglas Murray discusses 'problems' with two-state solution

🔴 ABL LIVE: Chicago Mayor Hospitalized?, Boeing Problems, Ron DeSantis, Nikki Haley, and more!

Finding Problems Out Of Nothing

Can Texas Secede As A Result Of Biden's Open Border Agenda?

Create Consistent Personalized AI Characters 𝙄𝙉𝙎𝙏𝘼𝙉𝙏𝙇𝙔! Open Source Stylization Method!

Stacey Lee Spratt Discusses HBCU Opportunities, 'Empower Me' Tour, Harlem Hops + More

Open Problems in Mechanistic Interpretability: A Whirlwind Tour

A Google TechTalk, presented by Neel Nanda, 2023/06/20. Google Algorithms Seminar.

ABSTRACT: Mechanistic interpretability is the study of reverse engineering the learned algorithms in a trained neural network, in the hope of applying this understanding to make powerful systems safer and more steerable. In this talk Neel will give an overview of the field, summarise some key works, and outline what he sees as the most promising areas of future work and open problems. This will touch on techniques in causal abstraction and mediation analysis, understanding superposition and distributed representations, model editing, and studying individual circuits and neurons.

About the Speaker: Neel works on the mechanistic interpretability team at Google DeepMind. He previously worked with Chris Olah at Anthropic on the transformer circuits agenda, and has done independent work on reverse-engineering modular addition and using this to understand grokking.
